Here’s a $3 billion question: Can your process mining tool detect fraud?

Wells Fargo’s couldn’t.

In 2016, it was revealed that employees had opened over 2 million fake accounts to meet aggressive sales targets. If you had looked at process mining dashboards of their account-opening process at the time, everything would have looked perfect: cycle times optimized, exception rates under 1%, and the "Happy Path" followed with textbook efficiency.

The process looked right. The meaning was catastrophically wrong.

This is the "Dashboard Dilemma." We’ve spent the last decade building NASA-style control rooms to monitor how data moves through our systems, while remaining completely blind to what that data actually means. We are drowning in process maps but starving for semantic understanding.

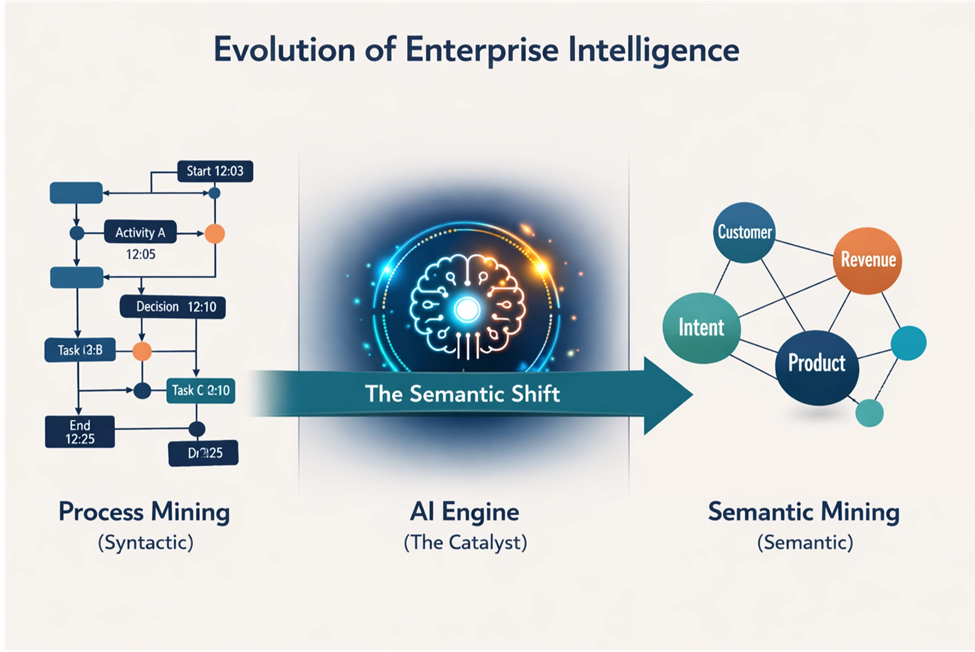

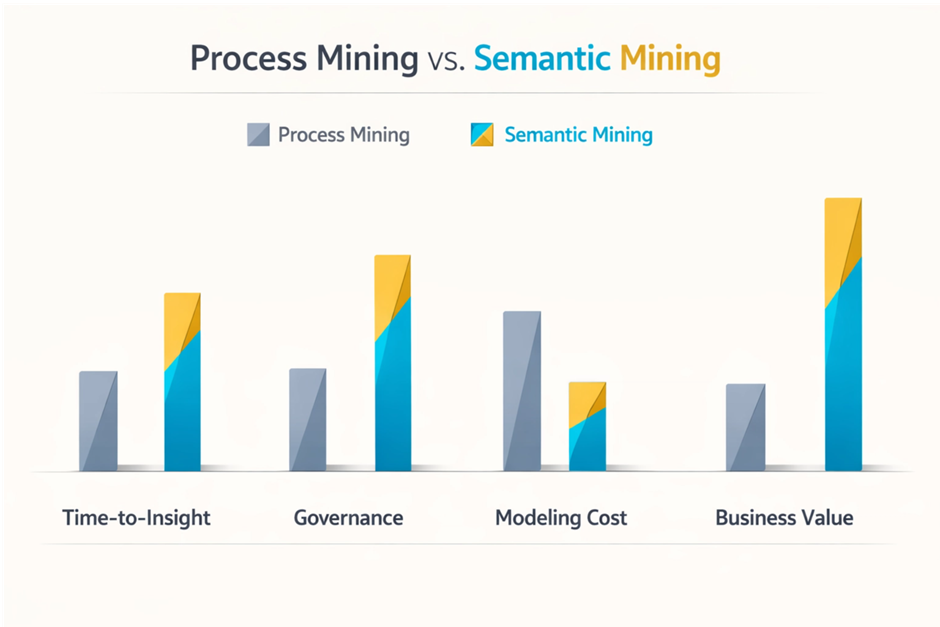

The next evolution isn't just "better" process mining. It’s Semantic Mining—and it’s finally moving us from syntactic efficiency to actual business intelligence.

The Illusion of Automation: Lessons from the Trenches

Process mining was supposed to be the end of "gut feeling" management. By treating event logs as the ground truth, we could finally see what was actually happening in our ERPs and CRMs.

And for some, it worked. Take GSK, for example. They are often cited as a success story, and rightfully so. But here’s what the glossy case studies usually leave out: to make it work, they needed a small army of 150 full-time employees, more than 70 custom SQL statements, and domain experts who manually estimated service times because the event logs were incomplete.

That’s not automation. That’s brute force with an algorithm attached.

For the rest of the enterprise world, the results have been less stellar. HFS Research found that a staggering 66% of process mining initiatives either disappointed stakeholders or under-delivered on their promises.

Why? Because most enterprise processes don't look like a clean assembly line. They look like a bowl of spaghetti that exploded. I once saw a healthcare client print their process map on a 15-foot poster. It was a tangled mess of thousands of concurrent paths and ad-hoc activities. The COO took one look and said, "If this is what our process actually looks like, we have bigger problems than efficiency."

She was right. Process mining showed them the chaos. It couldn't tell them which parts were broken and which parts were keeping patients alive.

The Semantic Gap: When "Efficient" is Dangerous

The fundamental limitation of process mining is that it is syntactic. It understands the structure of the logs—the `case_id`, the `activity`, the `timestamp`—but it has no concept of intent.
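To make "syntactic" concrete, here is a minimal sketch, assuming a toy event log of `(case_id, activity, timestamp)` tuples with made-up activity names. It computes the directly-follows relation and the trace variants, which is roughly the raw material a discovery algorithm works from. Nothing in it knows whether a customer ever asked for an account; it only sees order.

```python
from collections import Counter, defaultdict

# Toy event log of (case_id, activity, timestamp) tuples. Illustrative data only.
EVENT_LOG = [
    ("A1", "Create Account", 1),
    ("A1", "Run KYC", 2),
    ("A1", "Activate", 3),
    ("A2", "Create Account", 1),
    ("A2", "Run KYC", 4),
    ("A2", "Activate", 5),
    ("A3", "Create Account", 2),
    ("A3", "Activate", 3),
]


def traces(log):
    """Group events by case_id and order each case's activities by timestamp."""
    by_case = defaultdict(list)
    for case_id, activity, timestamp in log:
        by_case[case_id].append((timestamp, activity))
    return {case: tuple(act for _, act in sorted(events)) for case, events in by_case.items()}


def directly_follows(log):
    """Count how often activity a is immediately followed by activity b within one case."""
    dfg = Counter()
    for trace in traces(log).values():
        for a, b in zip(trace, trace[1:]):
            dfg[(a, b)] += 1
    return dfg


def variants(log):
    """Count distinct activity sequences (trace variants) across all cases."""
    return Counter(traces(log).values())


if __name__ == "__main__":
    for (a, b), n in sorted(directly_follows(EVENT_LOG).items()):
        print(f"{a} -> {b}: {n}")
    print(f"{len(variants(EVENT_LOG))} variants")
```

Process mining can spot that case A3 skipped KYC, because that is a structural deviation. It cannot tell whether the customer behind A1 ever wanted the account, because that fact is not in the log.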

When Wells Fargo’s dashboards showed "perfect efficiency" in account openings, they were technically accurate: the process was efficient, and process mining would have reported perfect metrics. What it couldn't see was the semantic violation, because it can't distinguish between:

- "Account created with valid KYC" (process compliance ✓)

- "Account created with customer authorization" (semantic reality ✗)

The fix after the scandal? Wells Fargo reintroduced branch managers to approve new accounts—not to check compliance rules (the system does that), but to make semantic judgments: "Does opening 30 accounts for one person in a single day make business sense, even if it technically passes all checks?"

That's human-in-the-loop semantic validation, and no process map can replace it. Because the original system didn't understand the semantics (the relationship between a customer’s actual intent and the account being created), it missed the fraud entirely.
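The branch manager's question can be written as a rule over business facts rather than over event order. A minimal sketch, assuming hypothetical account records that carry a customer ID, an opening date, a KYC result, and a `customer_consented` flag standing in for whatever evidence of consent exists (a signature, a login, a funded deposit). The field names and the default threshold of 5 accounts per day are illustrative assumptions, not Wells Fargo's actual controls.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Account:
    account_id: str
    customer_id: str
    opened_on: date
    kyc_passed: bool          # process-level check: the system already validates this
    customer_consented: bool  # semantic fact: did the customer actually ask for it?


def suspicious_accounts(accounts, max_per_day=5):
    """Flag accounts that pass KYC but lack consent, plus customers with
    implausibly many accounts opened on the same day."""
    flags = []
    per_day = defaultdict(list)
    for acct in accounts:
        per_day[(acct.customer_id, acct.opened_on)].append(acct)
        if acct.kyc_passed and not acct.customer_consented:
            flags.append((acct.account_id, "passed KYC without customer consent"))
    for (customer, day), accts in per_day.items():
        if len(accts) > max_per_day:
            flags.append((customer, f"{len(accts)} accounts opened on {day.isoformat()}"))
    return flags


if __name__ == "__main__":
    day = date(2016, 9, 1)
    # 30 accounts for one customer in one day; only the first was actually requested.
    accounts = [Account(f"X{i}", "C42", day, True, i == 0) for i in range(30)]
    flags = suspicious_accounts(accounts)
    print(len(flags), "flags; e.g.", flags[-1])
```

Every one of those 30 accounts would sail through a process-compliance check. The flags only appear once consent and volume per customer are modeled as data.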

Semantic Mining is inspired by the rigor of process mining but adds the layer we've been missing: understanding what the data means. It asks the questions a dashboard can't:

- Does this "Invoice Approved" activity in the ERP actually align with the "Contract Signed" intent in the CRM?

- Why does Finance define "Customer Value" as net revenue while Sales defines it as gross contract value?

- Is this process variation a bottleneck to be fixed, or a critical regulatory safeguard?

Take Palantir Foundry's approach to this problem. Instead of just visualizing process sequences, Foundry connects process data to an Ontology—a semantic layer that models real-world business concepts.

Here's what that means in practice: You're not just seeing "Invoice 12345 approved at 3:42pm." You're seeing "Invoice 12345 (Customer: Acme Corp, Contract: SVC-2024-001, Amount: $47K, Approver: Bob Jones, Authority Level: $50K) approved at 3:42pm."

Now you can ask semantic questions that process mining can't answer:

- Show me all invoices approved by managers without sufficient authority

- Which customers have invoices exceeding their contract limits?

- Are there invoices approved with no active underlying contract?

This is the ontology connecting process mechanics to business meaning—turning sequences into understanding.
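Once invoices are linked to contracts and approvers, those three questions become short queries. The sketch below is plain Python, not Foundry's Ontology API; the object types, field names, and sample values are hypothetical and only mirror the invoice example above. It also assumes "exceeding contract limits" means the cumulative invoiced amount under a contract.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Approver:
    name: str
    authority_limit: float  # largest amount this person may approve


@dataclass
class Contract:
    contract_id: str
    customer: str
    limit: float  # total amount that may be invoiced under this contract
    start: date
    end: date

    def is_active(self, on: date) -> bool:
        return self.start <= on <= self.end


@dataclass
class Invoice:
    invoice_id: str
    amount: float
    approved_on: date
    approver: Approver            # link: Invoice -> Approver
    contract: Optional[Contract]  # link: Invoice -> Contract (None if nothing is linked)


def approved_without_authority(invoices):
    """Q1: invoices whose amount exceeds the approver's authority limit."""
    return [i.invoice_id for i in invoices if i.amount > i.approver.authority_limit]


def customers_over_contract_limit(invoices):
    """Q2: customers whose cumulative invoiced amount exceeds a linked contract's limit."""
    totals, contracts = {}, {}
    for inv in invoices:
        if inv.contract is not None:
            cid = inv.contract.contract_id
            contracts[cid] = inv.contract
            totals[cid] = totals.get(cid, 0.0) + inv.amount
    return sorted({contracts[cid].customer
                   for cid, total in totals.items() if total > contracts[cid].limit})


def without_active_contract(invoices):
    """Q3: invoices with no linked contract, or whose contract wasn't active on the approval date."""
    return [i.invoice_id for i in invoices
            if i.contract is None or not i.contract.is_active(i.approved_on)]


BOB = Approver("Bob Jones", 50_000)
AMY = Approver("Amy Lee", 10_000)
SVC = Contract("SVC-2024-001", "Acme Corp", 60_000, date(2024, 1, 1), date(2024, 12, 31))
INVOICES = [
    Invoice("12345", 47_000, date(2024, 3, 5), BOB, SVC),
    Invoice("12346", 20_000, date(2024, 6, 1), AMY, SVC),
    Invoice("12347", 5_000, date(2025, 2, 1), AMY, None),
]

if __name__ == "__main__":
    print(approved_without_authority(INVOICES))     # Q1
    print(customers_over_contract_limit(INVOICES))  # Q2
    print(without_active_contract(INVOICES))        # Q3
```

None of these answers can come from event order alone; each depends on a link (invoice to approver, invoice to contract) carrying business attributes.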

But here's the limitation that Palantir's marketing doesn't emphasize: someone still has to build and maintain that ontology. Every Object, every Link, every relationship requires human data modelers to define it. When your business changes or systems evolve, the ontology needs manual updates.

That manual bottleneck is exactly where AI-driven semantic discovery changes the game.

The Revenue Recognition Nightmare: Another Semantic Failure

Let's consider another common enterprise disaster: revenue recognition. For most companies, recognizing revenue isn't a simple transaction. It's a complex dance between sales contracts, delivery milestones, customer acceptance, and accounting rules (like IFRS 15 or ASC 606).

Imagine a scenario where Sales books a large multi-year software deal. Their system registers the full contract value immediately. Finance, however, depending on the contract terms, may have to recognize that revenue over the contract period, or only as specific performance obligations (such as feature deliveries) are satisfied. Process mining might show a smooth flow from "Deal Closed" to "Invoice Sent" to "Payment Received." Perfect efficiency!
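The size of that gap is simple arithmetic. A minimal sketch, assuming a hypothetical $1.2M deal recognized straight-line over 12 months; whether ratable recognition is appropriate depends on the contract and the standard's performance-obligation analysis, which this code does not attempt.

```python
def ratable_schedule(contract_value, months):
    """Straight-line recognition: equal amounts per month, with any rounding
    remainder (in cents) pushed into the final month."""
    cents = round(contract_value * 100)
    base, remainder = divmod(cents, months)
    schedule = [base] * months
    schedule[-1] += remainder
    return [c / 100 for c in schedule]


def booked_vs_recognized(contract_value, months, as_of_month):
    """Sales' view (full booking) vs. Finance's view (recognized through month N), plus the gap."""
    recognized = sum(ratable_schedule(contract_value, months)[:as_of_month])
    return contract_value, recognized, contract_value - recognized


if __name__ == "__main__":
    booked, recognized, gap = booked_vs_recognized(1_200_000, 12, 3)
    print(f"Sales: {booked:,.0f}  Finance: {recognized:,.0f}  gap: {gap:,.0f}")
```

After one quarter, both numbers are "revenue" in someone's system, and they differ by $900K. Working in integer cents avoids the schedule drifting by fractions of a cent.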

But if the underlying semantic definitions of "revenue" differ between Sales and Finance (Sales counts bookings as revenue, while Finance adheres strictly to GAAP/IFRS principles), you have a ticking time bomb. The dashboards look green, but every figure built on the Sales definition overstates revenue, and if those figures leak into reported results, the financial statements can be materially misstated. This isn't a process bottleneck; it's a semantic conflict that can lead to restatements, regulatory fines, and a complete loss of investor trust.

Process mining would show how the data moved. Semantic mining would highlight that the definition of "revenue" is inconsistent across departments, leading to a false sense of financial health. It would identify the semantic discrepancies between the Sales CRM and the ERP's General Ledger, allowing for proactive reconciliation before the auditors come knocking.
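A reconciliation along those lines might look like the sketch below. It assumes hypothetical extracts: a CRM table whose "revenue" field actually holds booking value, and General Ledger postings to revenue accounts, both keyed by a shared contract ID. It flags contracts where the two systems' notions of "revenue" diverge beyond an assumed 5% tolerance, and contracts that exist in only one system.

```python
from collections import defaultdict

CRM_DEALS = {  # contract_id -> "revenue" as reported in the CRM (really: bookings)
    "C-100": 1_200_000.0,
    "C-200": 50_000.0,
    "C-300": 80_000.0,
}

GL_REVENUE_POSTINGS = [  # (contract_id, amount) posted to revenue accounts in the ERP
    ("C-100", 100_000.0),
    ("C-100", 100_000.0),
    ("C-200", 50_000.0),
    ("C-400", 12_000.0),
]


def reconcile(crm, gl_postings, tolerance=0.05):
    """Return (contract_id, crm_value, gl_value, reason) for each discrepancy."""
    gl = defaultdict(float)
    for contract_id, amount in gl_postings:
        gl[contract_id] += amount
    issues = []
    for cid in sorted(set(crm) | set(gl)):
        crm_value, gl_value = crm.get(cid), gl.get(cid)
        if crm_value is None:
            issues.append((cid, None, gl_value, "in GL only"))
        elif gl_value is None:
            issues.append((cid, crm_value, None, "in CRM only"))
        elif abs(crm_value - gl_value) > tolerance * max(abs(crm_value), abs(gl_value)):
            issues.append((cid, crm_value, gl_value, "definitions diverge"))
    return issues


if __name__ == "__main__":
    for issue in reconcile(CRM_DEALS, GL_REVENUE_POSTINGS):
        print(issue)
```

A large gap on C-100 is not necessarily an error; it may be exactly the booking-versus-recognition difference. The point is that it gets surfaced and explained instead of being silently summed into one "revenue" number.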

How Semantic Mining Actually Works

Why Humans Aren't the Bottleneck—They're the Design

Remember GSK's process mining success? The glossy case study calls it automation. The reality: 150 people making it work.

But that's not a failure. It's the point.

Semantic mining isn't about removing humans from the loop—it's about positioning them correctly. AI handles the high-volume, high-confidence pattern matching: "These three columns in different systems are calculating the same thing." Humans handle the judgment calls: "Which definition is the official one? What happens to existing reports when we change it?"

When ASC 606 replaced the previous revenue recognition rules, no algorithm could decide what "transfer of control" meant for each company's specific contracts. That required finance teams, legal teams, and domain experts working together for months.

The goal isn't full automation. It's symbiotic intelligence: AI discovers semantic conflicts at scale, humans resolve them with authority and context.

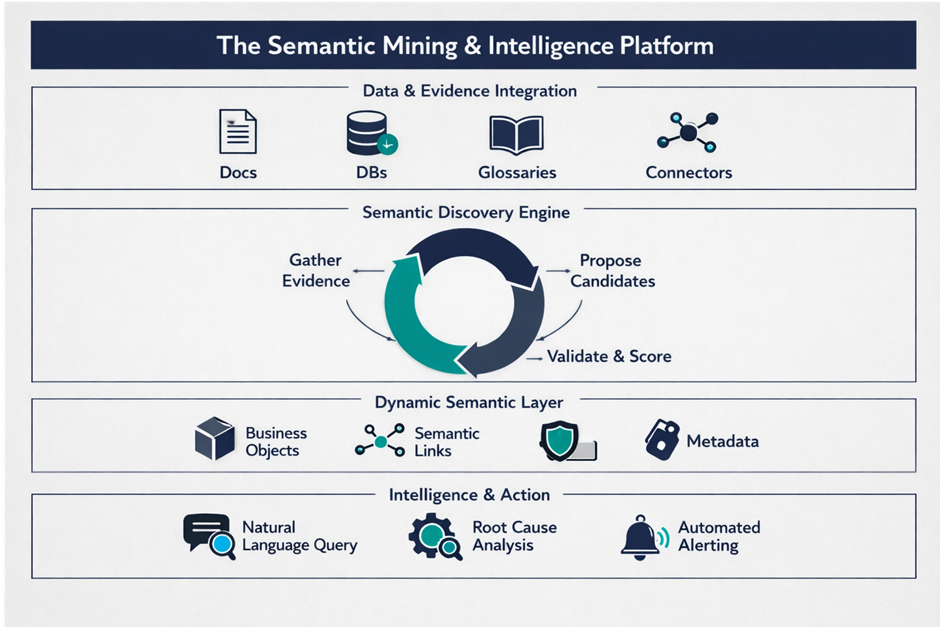

So, how do you actually "mine" meaning out of a mess of databases?

It starts by pointing AI at the evidence people usually ignore: not just the schemas, but the documentation, the business glossaries, and most importantly, how people actually query the data. Usage patterns tell the truth that documentation hides.
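Query logs are a rich source of that evidence. As a rough sketch, assuming a hypothetical log of SQL strings in which every table has an alias and joins use `alias.column = alias.column`, the code below resolves aliases with regular expressions and counts which column pairs analysts actually join on. A real system would use a proper SQL parser; regexes are only good enough for illustration.

```python
import re
from collections import Counter

# Hypothetical query log; assumes every table in FROM/JOIN has an alias.
QUERY_LOG = [
    "SELECT * FROM crm.accounts a JOIN billing.customers b ON a.cust_id = b.customer_number",
    "SELECT b.plan FROM billing.customers b JOIN crm.accounts a ON b.customer_number = a.cust_id",
    "SELECT o.total FROM erp.orders o JOIN crm.accounts a ON o.account_ref = a.cust_id",
]

ALIAS_RE = re.compile(r"\b(?:FROM|JOIN)\s+([\w.]+)\s+(?:AS\s+)?(\w+)", re.IGNORECASE)
EQ_RE = re.compile(r"(\w+)\.(\w+)\s*=\s*(\w+)\.(\w+)")


def join_pairs(sql):
    """Yield order-independent (table.column, table.column) pairs from equality conditions."""
    aliases = {alias: table for table, alias in ALIAS_RE.findall(sql)}
    for a1, c1, a2, c2 in EQ_RE.findall(sql):
        left = f"{aliases.get(a1, a1)}.{c1}"
        right = f"{aliases.get(a2, a2)}.{c2}"
        yield tuple(sorted((left, right)))


def mine_joins(log):
    """Count how often each column pair is joined across the whole log."""
    counts = Counter()
    for sql in log:
        counts.update(join_pairs(sql))
    return counts


if __name__ == "__main__":
    for pair, n in mine_joins(QUERY_LOG).most_common():
        print(n, pair)
```

Two differently named columns that analysts join over and over are strong evidence of one business entity, whatever the documentation says.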

Imagine you have three different columns in three different systems that all claim to be "Customer Lifetime Value." In a traditional setup, you’d need a six-month project and ten meetings to figure out which one to trust.

An AI-driven Semantic Mining engine reads the context. It sees that Finance is calculating CLV in dollars, Marketing in cents, and Product is including projected future value. It flags the conflict: "Hey, you have three different definitions for the same concept. Which one is the 'Official' one?"
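Once candidate columns are grouped under one concept, flagging the conflict is mostly bookkeeping. The sketch below assumes that grouping and the per-column metadata (unit, whether projected value is included) have already been extracted, which is the hard part an AI engine would do; the system and column names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MetricColumn:
    system: str
    column: str
    concept: str               # business concept the column claims to represent
    unit: str                  # e.g. "USD" or "USD_cents"
    includes_projection: bool  # does the value include projected future revenue?


CANDIDATES = [
    MetricColumn("finance_dw", "clv_usd", "Customer Lifetime Value", "USD", False),
    MetricColumn("marketing_db", "lifetime_value", "Customer Lifetime Value", "USD_cents", False),
    MetricColumn("product_analytics", "ltv_projected", "Customer Lifetime Value", "USD", True),
]


def definition_conflicts(columns):
    """For each concept, report every attribute on which its columns disagree."""
    by_concept = {}
    for col in columns:
        by_concept.setdefault(col.concept, []).append(col)
    conflicts = {}
    for concept, cols in by_concept.items():
        diffs = {}
        for attr in ("unit", "includes_projection"):
            if len({getattr(c, attr) for c in cols}) > 1:
                diffs[attr] = {f"{c.system}.{c.column}": getattr(c, attr) for c in cols}
        if diffs:
            conflicts[concept] = diffs
    return conflicts


if __name__ == "__main__":
    for concept, diffs in definition_conflicts(CANDIDATES).items():
        print(f"Conflict in '{concept}': differs on {', '.join(diffs)}. Which one is official?")
```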

Usually, it’s right. Sometimes, it surfaces disasters you didn't know existed—like the time a DACH region churn spike was caused simply because the retention team was chasing a "high-risk" flag that the billing system didn't even recognize. One semantic conflict, €2M in lost revenue.
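The churn example is a value-domain mismatch: one system emits a code that another system's vocabulary doesn't contain. A minimal sketch, assuming hypothetical flag values for a shared "churn risk" concept in a retention tool and a billing system, checks for codes that exist on one side only.

```python
def domain_mismatches(concept, producer_values, consumer_values):
    """Report values one system uses for a shared concept that the other doesn't recognize."""
    producer, consumer = set(producer_values), set(consumer_values)
    return {
        "concept": concept,
        "unknown_to_consumer": sorted(producer - consumer),
        "unused_by_producer": sorted(consumer - producer),
    }


if __name__ == "__main__":
    retention_flags = ["low", "medium", "high_risk"]  # hypothetical retention-tool values
    billing_flags = ["low", "medium", "high"]         # hypothetical billing-system values
    print(domain_mismatches("churn_risk", retention_flags, billing_flags))
```

A near-miss pair like `high_risk` versus `high` is exactly the kind of proposal a human should confirm before anyone remaps data.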

This AI engine, inspired by the ontological approach of platforms like Foundry, goes a step further: it doesn't just model the world, it discovers semantic relationships dynamically. It combines several techniques to:

- Automate Semantic Discovery: Leveraging large language models (LLMs) to understand the intent behind data, analyzing documentation, query logs, and business metadata to propose semantic concepts.

- Enable Agentic Reasoning: Employing specialized AI agents that can reason about data, identifying that differently named columns in separate systems represent the same business entity by mining their usage context.

- Build a Dynamic Semantic Layer: Continuously updating and maintaining a living semantic layer, where high-confidence proposals are automatically persisted, and human-in-the-loop validation ensures accuracy for complex cases.
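The matching and routing steps can be sketched together. The code below is not an LLM or an agent; it swaps in a much simpler technique, Jaccard similarity over the sets of columns each candidate is queried alongside, as a stand-in confidence score. Proposals at or above an assumed threshold of 0.8 are persisted automatically; the rest go to a human review queue. Column names and usage contexts are hypothetical.

```python
from itertools import combinations

# Hypothetical usage contexts: for each column, the other columns it appears with in queries.
USAGE_CONTEXT = {
    "crm.accounts.cust_id": {"region", "segment", "signup_date", "account_manager", "churn_flag"},
    "dw.dim_customer.customer_key": {"region", "segment", "signup_date", "account_manager",
                                     "churn_flag", "lifetime_value"},
    "billing.customers.customer_number": {"region", "segment", "signup_date", "plan"},
    "erp.orders.account_ref": {"region", "order_total", "ship_date"},
}


def jaccard(a, b):
    """Size of the intersection over size of the union; 0.0 when both sets are empty."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def propose_mappings(contexts, min_score=0.2):
    """Propose 'same entity' pairs, scored by overlap of usage context, best first."""
    proposals = []
    for (col_a, ctx_a), (col_b, ctx_b) in combinations(sorted(contexts.items()), 2):
        score = jaccard(ctx_a, ctx_b)
        if score >= min_score:
            proposals.append((col_a, col_b, round(score, 2)))
    return sorted(proposals, key=lambda p: p[2], reverse=True)


def route(proposals, auto_threshold=0.8):
    """Split proposals into an auto-persisted list and a human-review queue."""
    persisted = [p for p in proposals if p[2] >= auto_threshold]
    review = [p for p in proposals if p[2] < auto_threshold]
    return persisted, review


if __name__ == "__main__":
    persisted, review = route(propose_mappings(USAGE_CONTEXT))
    print("auto-persisted:", persisted)
    print("needs review:", review)
```

The threshold is the design lever: raise it and more proposals land in front of domain experts; lower it and the semantic layer updates faster but trusts the machine more.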

Implementing Semantic Mining: Where to Start

Ready to move beyond the spaghetti and start mining meaning? Here are some practical steps and red flags to look for:

1. Identify Your Semantic Hotspots: Where do different departments use the same terms with different meanings? "Customer," "Revenue," "Product," "Order" are common culprits. These are your prime candidates for semantic conflict resolution.

2. Look for "Dashboard Dilemmas": If your leadership team is constantly questioning dashboard numbers, or if different reports for the same metric show wildly different results, you have a semantic problem, not just a data quality issue.

3. Prioritize Business-Critical Processes: Start with areas where semantic inconsistencies have the highest financial or regulatory impact (e.g., financial reporting, compliance, customer churn analysis).

4. Embrace Human-in-the-Loop: Don't aim for 100% automation from day one. The AI Engine is powerful, but human domain experts are crucial for validating complex semantic proposals and refining the system over time.

5. Evaluate Tools for Semantic Discovery, Not Just Mapping: Look for platforms that offer more than just process visualization. Seek out capabilities for automated metadata extraction, LLM-driven semantic concept proposal, and dynamic ontology management. The goal is to discover meaning, not just to manually define it.
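The red flag in step 2 can be checked mechanically before anyone argues about it in a meeting. A minimal sketch, assuming you can pull the same named metric from several reports for the same period; the report names, values, and 2% tolerance are hypothetical.

```python
def divergent_metrics(readings, rel_tolerance=0.02):
    """readings: {metric: {report_name: value}}. Return the metrics whose reported
    values spread by more than rel_tolerance relative to the largest magnitude."""
    flagged = {}
    for metric, by_report in readings.items():
        values = list(by_report.values())
        if len(values) < 2:
            continue  # nothing to compare against
        hi, lo = max(values), min(values)
        scale = max(abs(hi), abs(lo))
        if scale and (hi - lo) / scale > rel_tolerance:
            flagged[metric] = by_report
    return flagged


READINGS = {
    "Q3 revenue": {"sales_dashboard": 14.2e6, "finance_close_pack": 11.8e6, "board_deck": 11.8e6},
    "active customers": {"crm_report": 4_210, "billing_report": 4_195},
}

if __name__ == "__main__":
    for metric, by_report in divergent_metrics(READINGS).items():
        print(f"{metric}: {by_report}")
```

Close agreement doesn't prove the definitions match, but a wide spread is a strong hint that they don't, and a good place to start looking for semantic hotspots.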

Beyond the Dashboard

We need to stop building dashboards that only monitor the "how." The future of enterprise intelligence lies in understanding the "what."

Process mining answers "What happened?"

Semantic mining answers "What should have happened—and why does it matter?"

Wells Fargo had perfect answers to the first question while catastrophically failing at the second. If your dashboards show perfect efficiency while your business logic is in shambles, you don't have a process problem. You have a semantic problem.

It’s time to stop mapping the spaghetti and start mining the meaning. Because at the end of the day, a beautiful dashboard that tells you the wrong thing is just a very expensive lie.